Finite Dimensional Approximation of the Monodromy Operator of a Periodic Delay Differential Equation

Vol.09No.11(2018), Article ID:88878,23 pages

10.4236/am.2018.911086

Eli A. Vazquez, Joaquin Collado

Automatic Control Department, CINVESTAV-IPN, Mexico City, Mexico

Copyright © 2018 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: October 20, 2018; Accepted: November 27, 2018; Published: November 30, 2018

ABSTRACT

Using piecewise constant orthonormal functions, an approximation of the monodromy operator of a Linear Periodic Delay Differential Equation (PDDE) is obtained by approximating the integral equation corresponding to the PDDE as a linear operator over the space of initial conditions. This approximation allows us to consider the state space as finite dimensional resulting in a finite matrix approximation whose spectrum converges to the spectrum of the monodromy operator.

Keywords:

Monodromy Operator, Periodic Delay Differential Equations, Walsh Functions, Block Pulse Functions, Finite Rank Approximation

1. Introduction

Linear Periodic Differential Equations (PDDEs) have been of importance for studying problems of vibration, mechanics, astronomy, electric circuits, biology among others in [1] several examples of delay effects on mechanical systems are given, in [2] and [3] effects of the delay in physics and biological processes are considered. Neglecting the fact that interaction between particles does not occur instantaneously sometimes is no longer possible or practical, these finite velocity interactions bring new behaviors that modify significantly the behavior of the system, see for example [4] and [5] . In the study of these Delay Differential Equations many problems arise, mainly due to the infinite dimensional nature of the system, in the case of linear PDDEs the stability depends on the spectrum of the monodromy operator, which in the non delayed case corresponds to the monodromy matrix.

Approximation methods of the monodromy operator have been proposed a number of times, [6] and [7] make use of pseudospectral collocation methods to approximate the monodromy operator. The well known method of semidis- cretization [8] has also been used to determine the stability of a PDDE. In [9] using a Walsh approximation method from [10] a set of approximated solutions of a PDDE was used to construct an approximation of the monodromy operator by numerical integration.

In this work, the main contribution is an approximation of the monodromy operator of the PDDE by a linear Equation (35) of the form , where the directly obtained matrix will correspond to the approximated monodromy operator, with no need of approximating solutions or numerical integration. Stability of the PDDE can then be determined by the spectrum of without any need of solving any equation. Convergence of and its spectrum is stated in Theorems 10, 14 and 15. This approximation is made by projecting the integral equation corresponding to the PDDE in to a a finite dimensional subspace spanned by finitely many piecewise constant functions. The utilized functions must belong to a complete set of piecewise constant orthonormal functions with discontinuities only in dyadic rationals, such as Haar, Walsh or Block Pulse Functions (BPF). The theoretical framework of this paper will be based on Walsh functions since most results are stated for these functions. Once obtained the finite dimensional approximation of the monodromy operator will be stated in terms of BPF to reduce the computational cost of the stability analysis.

The main goal of this paper is to provide a computationally light method, with straightforward implementation, to approximate the monodromy operator of a delay differential equation, in order to facilitate the computation of stability diagrams used to study the behavior of the equation with respect to changes in its parameters.

2. Linear Periodic Delay Differential Equations

Consider the linear PDDE:

(1)

where , , , are matrices of ω-periodic functions continuous on . Denote as the space of conti- nuous functions from into , this space is a Banach space with the norm [11] .

A solution of (1) with initial condition at is understood as a mapping , such that for and that for and , where sa- tisfies (1) for with for . At time t a solution will be an element of space C. Explicitly we will have:

(2)

with . The operator T is called the solution mapping and is analogous to the state transition matrix of the undelayed case.

2.1. Monodromy Operator

Taking into account the periodic nature of (1) it is relevant to consider (2) when , in this case we will denote the solution mapping as .

Next some properties consequence of being completely continuous are enlisted, [12] :

• The spectrum is a countable compact set in the complex plane.

• , and if then has a nonzero solution .

• If the cardinality of the set is infinite then the only limit point of is 0.

• The cardinality of outside any disc of radius r, is finite.

• .

henceforth denoted simply as U will be called the monodromy opera- tor of (1) and the elements , will be called characteristic multipliers.

2.2. Stability

It was shown [12] that Floquet theory is valid for PDDEs in a certain sense, and that stability of (1) will be related to the spectrum of U. The following theorem from [13] establishes the conditions for the stability of (1):

Theorem 1. If the characteristic multipliers are situated inside the unit circle , then the zero solution of the system is uniformly asymptotically stable. If the multipliers of the system are inside the closed unit circle, and if multipliers situated on the unit circumference correspond to simple elementary divisors then the zero solution is uniformly stable.

Remark 1. If all the above statements are valid for , where and [12] .

Remark 2. Note that Theorem 1 extends the properties of an undelayed case [14] [15] .

3. Walsh Functions

3.1. Definition

Walsh functions are a set of piecewise constant complete orthonormal functions introduced in [16] and defined on the interval , although easily translated to any other interval. Formal definition of the Walsh functions may be done in many ways [17] , with the use of Rademacher functions the l-th Walsh function may be defined as [17] :

(3)

where the are the coefficients of the unique binary expansion of , namely

(4)

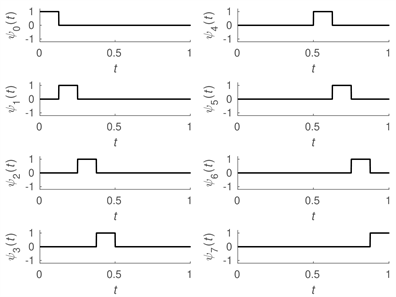

There are as well many ways of ordering the Walsh functions, in this paper we will use the so called Dyadic ordering [18] . Figure 1 shows the first eight Walsh functions in this ordering.

To a function will correspond the Walsh approximation:

(5)

where The right side of (5) will converge in norm to f for any and if , it will converge uniformly for any continuous function that has, at most, jump discontinuities at dyadic rationals (numbers of the form ) [16] . When , (5) will be called a Walsh expan- sion.

3.2. Properties of Walsh Functions

We will define a Walsh matrix as the matrix consisting on the discretization values of the Walsh functions over the interval .

For example we will have for :

We can also define the Delayed Walsh Matrix as a Walsh matrix of order shifted m columns to the right and with the first m columns being zeros. In the same manner we define a Forwarded Walsh Matrix , but shifted to the left:

Figure 1. Walsh functions in dyadic ordering.

(6)

We define the vector consisting on the first Walsh functions as:

(7)

Since the order of the approximation should be clear from context we write simply . We define as well the dyadic sum between tho nonnegative integers as:

(8)

where and are respectively the coefficients of the binary expansions of q and r as in (4). To a vector we associate a sym- metric matrix whose i, jth entry will be given by . If for example , then:

We make use of the following Lemma:

Lemma 2. [19] Let , then:

(9)

The integral of a Walsh vector can be approximated by a Walsh expansion of the form:

(10)

where P is given by [20] :

(11)

(11)

The approximated integral converges to the exact integral uniformly [21] .

To a function matrix we associate the Walsh approximation :

(12)

where

(13)

and each is the vector of coefficients of the Walsh approximation of each entry of .

Let be a function matrix with Walsh approximation and a function vector with Walsh approximation , whose elements are of the form , where is the vector of Walsh coefficients of the approximation of each element of f. Define , then from (9) we have:

(14)

where:

(15)

3.3. Approximation Error

Regarding the error of Walsh approximation we have:

Lemma 3. [17] If f satisfies the Lipschitz condition, then:

(16)

for some constant C.

3.4. Block Pulse Functions

The set of Block pulse functions of order p is defined on the interval as the set , where [22] :

(17)

Block Pulse Functions, shown on Figure 2, are orthogonal, easily normalizable [23] and when they form a complete set [22] . We restrict ourselves to

Figure 2. Block pulse functions.

the case for k integer. Walsh functions and Block Pulse Functions are related by a one on one correspondence, meaning that there exists a unique bijective linear transformation that maps the first Walsh functions on to the set of Block Pulse Functions of order [24] . The existence of this transfor- mation and the completeness of the Block Pulse functions ensure that the properties of Walsh functions are inherited to Block Pulse Functions. In particular we have the following:

Lemma 4. [24] Matrix P in (10) is similar to the upper triangular matrix , where Q is a nilpotent matrix

with ones above the diagonal and zeros everywhere else:

(18)

Lemma 5. [25] Let be the Walsh approximation of the function , with being the vector of coefficients of the Walsh approximation. Then is similar to a diagonal matrix:

(19)

4. Approximation of the Monodromy Operator

Without loss of generality we assume . We approximate the monodromy operator by projecting (1) on a finite dimensional subspace of formed by the span of piecewise constant orthonormal functions. We will assume the orthonormal functions to be Walsh functions, the analysis can be carried to the case of Block Pulse Functions or Haar functions by means of similarity transformations. We also restrict ourselves to the case of commensurable delays, that is for some .

Integrating (1) from 0 to t we will have:

(20)

with for . A solution of (20) will correspond to a solution of (1) [26] . Let denote the projection mapping that takes the Walsh expansion to the Walsh approximation

. Along with (20) we introduce its projection onto the space

:

(21)

where and since it is a constant. and correspond to and , this is, the approximations of and , respectively, as in (12). is still not defined for , we cant define yet the projection of the initial condition since its defined on a different domain than the domain of definition of the Walsh functions. For this we have:

Proposition 6. The value of the Walsh approximation of order at an interval , , depends only on the value of the function at that same interval.

Proof. It follows immediately from Theorem 2.1.3 in [17] .

Thus we define the projection as the Walsh approximation of order of an integrable function defined on , that is equal to in and 0 everywhere else, and we make for . Since Walsh functions were not defined at

we simply set .

We split the second integral in (20) as:

(22)

where is the unit step function.

We make use of the following Lemma:

Lemma 7. [17] Any function constant on the intervals of the form , can be represented in the form:

(23)

i.e. the non zero coefficients of the Walsh expansion of have indices no greater than . Moreover, this representation is unique.

From linearity of and from Lemma 7. we have that we can split the second integral in (21) as in (22) with this being consistent with the projection:

(24)

Since we have that , we also have that with each and being vectors of Walsh coefficients. Defining , , and recalling (7) and (12), we can write (21) as:

(25)

From Lemma 7. we have that both, and , can be uniquely represented in terms of :

(26)

and are block diagonal matrices, whose diagonal entries are given respectively by and , which satisfy and When ap- proximating by Walsh functions, matrix is given by and it is called the Walsh Shift Operator [10] . is given in an analogous way as . The term will also have an unique representation:

(27)

since . will be again a block diagonal matrix, whose diagonal entries are given by . In the case of Walsh functions, matrix evaluates at time and assigns its value to the coefficient of the constant Walsh function , hence .

From (14), (27) and (26) we have that we can write (25) as:

(28)

From (10) we have that:

(29)

where is a matrix given by:

(30)

from which we arrive at:

(31)

Since is nonsingular we have:

(32)

From (32) we can define a mapping :

(33)

However by construction we can see that the domain of is in fact the subspace of M consisting of al functions generated by linear combinations of the first Walsh functions, that are equal to 0 for , we denote this subspace as . Likewise we can extend the domain of definition of the solution map of (1) from the space , to the subspace of consisting of al functions that are continuous on that are equal to 0 for , this space, denoted is isomorphic to the space and its projection on the space M corresponds to . We can say now that is an approximation of the solution mapping T of (1), we obtain an approximated solution which satisfies (21), and thus, we have an approximation of the solution map of (1).

In order to study the monodromy operator of (1), we must study the state at , this is, we must know the solution of (1) for corresponding to an initial condition. Since is the projection in the space M of and since is isomorphic to , the approximation of the state at will be given by the projection of the approximated solution into . Hence, by Lemma 7 we will have:

(34)

where is a is a block diagonal matrix which projects the approximated solution into the subspace , with diagonal entries . In the case of Walsh functions, we will have

where are given by Walsh matrices of order k that instead have 0s in the first (1 − m)th columns.

From (34) we obtain our approximated monodromy operator, given by:

(35)

Approximation by Block Pulse Functions

Equation (35) for the approximation of is valid for all sets of orthonormal functions that can be obtained by linear combinations of Walsh functions. For the numerical calculation of the approximation of the monodromy operator, Block Pulse Functions are the most advantageous set since with their simplicity comes a lesser computational load. In particular for the Block Pulse Function approximation we will have:

(36)

(37)

(38)

If we define:

(39)

we will have:

(40)

and

(41)

Finally, if in (15) we have , then will be given as in (15) with:

(42)

where

(43)

with the same for and .

5. Convergence of

We denote as the space of functions from to , whose limit from the left exist at every point in and whose limit from the right exists at every point in and that are also continuous at every point that is not a dyadic rational, this is, is the space of continuous functions on that may have jump discontinuities at dyadic rationals.

Proposition 8. The space is a Banach space with the sup norm.

Proof. Let be a Cauchy sequence in , then , such that implies:

Therefore for every we have , for . Thus is a Cauchy sequence in , therefore convergent, we denote such limit as , then we have that for every , there exists N, such that for :

this is converges to uniformly.

Now let , then for every the limit from the right at exists, this is, , such that for every , there exist such that:

(44)

The sequence is defined on , therefore is convergent if and only if it is Cauchy. We assume by contradiction that is not Cauchy, then there exist such that for every , there exist such that:

(45)

Since is Cauchy we have that there exists such that implies:

Let such that (45) holds. Let such that for we have:

similarly let such that we have:

Take , then for we have:

but and , therefore:

which is a contradiction, therefore the sequence converges to a limit .

Now let , let and such that for :

then:

Therefore is a right limit of at , hence the right limits exist. Repeating the process for the left limits we conclude that the left limits of exist where required.

Now let , not a dyadic rational, then each is continuous at , therefore is continuous at . Then we have , which concludes the proof.

We make use of the following:

Lemma 9. [20] Let the dyadic intervals , with

and let be the characteristic function of the interval , then:

(46)

Now we state:

Theorem 10. Let the Banach space , the approximated monodromy operator converges in the strong operator sense to the monodromy operator U.

Proof. Let denote the projection mapping that takes the Walsh expansion to the Walsh approximation . Without risk of confusion we will denote as the operator and as to the operator .

Denote as the solution of (20) corresponding to an initial condition for . Likewise denote as the solu- tion of (21). Clearly and for

Let , and . Then:

(47)

Since the solution is continuous we have that the first term on the right converges to 0 as . We now observe that and satisfy:

(48)

and

(49)

respectively. Set , , the same way set and as their respective approximations. Define . We have:

(50)

(51)

We have that the right side of (51) is piecewise constant, so if we define the dyadic intervals , for with , we can write:

(52)

where is the characteristic function of the interval . From Lemma 9, we will have for :

(53)

Since is constant on the dyadic intervals then for . Therefore:

(54)

Define , and assume . This restraint is not significant since and are bounded, therefore and are bounded, and as .

We have:

(55)

From all of the above and defining , with and denoting:

we arrive at:

(56)

Which gives:

(57)

Indeed, for , , and assuming we have:

(58)

For we have , then , therefore:

(59)

Taking into account the definition of we have:

(60)

Since and we assumed , then:

(61)

and

(62)

From (61), (62) and (59) we arrive at:

(63)

The expresion is bounded, and clearly , and since , we have as , therefore we have as , this is:

(64)

from where it follows immediately that:

(65)

which concludes the proof.

Corollary 1. If is Lipschitz, then the error of the approximation , satisfies .

Proof. From (59), we have that since is Lipschitz and is differentiable, the result follows immediately from Lemma 3.

We now prove that the approximated solution is indeed equal to the Walsh approximation of the exact solution. First we state:

Lemma 11. [21] Let be the Walsh expansion of a function , if converges to zero everywhere, then , .

Now we are ready to prove:

Theorem 12. Let , the solution of (20) corresponding to an initial condition for . Likewise let , the solution of (21), then .

Proof. We have and , for some coefficients and , then :

(66)

Since the solution is continuous and from (64) we have that the two terms on the right converge to zero, therefore converges to zero uniformly, hence everywhere, and from Lemma 11. we have , for , this is

Corollary 2. ,

Lemma 13. Let compact, then for very , there exists , such that:

(67)

Proof. Let , since X is compact, there exist finite open balls of radius centered around finite that cover X, then , , for some . Let k such that for any , . Let , then for some :

Since there are finitely many , the desired result follows immediately.

The solution of (1) will be given by:

(68)

where X is a solution matrix such that and for [13] . If then the solution will be continuous and the solution matrix X will be the same that in . Furthermore we will have a bounded operator:

(69)

and like in , we will have that T maps arbitrary bounded sequences into equicontinuous sequences, then by the Arzelá-Ascoli Theorem we will have that T is compact in .

We now have:

Theorem 14 The approximated monodromy operator converges uni- formly to the monodromy operator U.

Proof. Let denote the closed unit ball on . Since T is compact, then the image is also compact, and by Lemma 13 we will have that for any there exists k such that:

(70)

The fact that as follows immediately.

With this we can establish convergence of the spectrum:

Theorem 15. The spectrum of the approximated monodromy operator converges to the spectrum of the monodromy operator U. More precisely, for any open set , such that , then there exist K such that , for any . Furthermore, let and let be a small circle centered at such that any other eigenvalue of U is outside , then the sum of the multiplicities of the eigenvalues of within will be equal to the multiplicity of .

Proof. Let be a small circle with center at , such that any other eigenvalue of U is outside this circle. Then the spectral projection of is given by:

(71)

The spectral projection of the spectrum of inside will be given by:

(72)

Since , then converges uniformly to P, therefore, there must exist k such that part of the spectrum of is in .

Since P and are finite dimensional operators and since converges uniformly to P, we will have that for sufficiently large k:

(73)

this is, the algebraic multiplicity of will be the same as the algebraic multiplicity of the spectrum of contained in .

6. Approximation of the Solution of a Delayed Mathieu Equation

If one considers a generalization of a Mathieu Differential Equation to a Functional Differential Equation, we will find that we can consider different cases depending on where the parametric excitation is placed [9] . We consider the scalar equation:

(74)

The operator in (33) provides a natural way to approximate the solution of a periodic delay differential equation. Consider Equation (74), with ,

, , and . Figure 3 and Figure 4, show the comparison

between the solution obtained by simulation and the approximation of the solution obtained from , for and respectively, for .

Figure 5 and Figure 6, show the magnitude of the error for the appro- ximation of the solution obtained with the approximated operator , for and , respectively.

Figure 3. Solution of (74) for . The solid line corresponds to the simulated solution and the dashed line corresponds to the approximated solution for .

Figure 4. Solution of (74) for . The solid line corresponds to the simulated solution and the dashed line corresponds to the approximated solution for .

Figure 5. Magnitude of error of the approximated solution corresponding to .

Figure 6. Magnitude of error of the approximate solution corresponding to .

7. Stability Chart of the Delayed Mathieu Equation

We will use the approximated monodromy operator to determine the stability chart of a particular case of delayed Mathieu equation:

(75)

Stability charts are used to to determine the stability of periodic differential equations with respect to some parameters. Figure 7 shows the stability diagram

of (75) for the plane with and . For this equation stability

zones are disconnected and there is no symmetry with respect to the horizontal axis, contrary to the case of the undelayed Mathieu equation.

Now consider the equation:

(76)

This equation has been studied in [8] . Figure 8 shows the stability diagram for the parametric plane with and .

8. Conclusion

The use of Walsh functions provides the finite dimensional approximation (35) of the monodromy operator. This approximation and the analysis leading to it are virtually the same for any piecewise constant orthonormal basis which can be formed by linear combinations of Walsh functions such as Block Pulse Functions. The use of Block Pulse Functions provides a computationally

Figure 7. Stability diagram for the parametric plane αβ of Equation (75). Asymptotically stable zones (grey) and unstable zones (white) are shown.

Figure 8. Stability diagram for the parametric plane αβ of Equation (76). Asymptotically stable zones (grey) and unstable zones (white) are shown.

inexpensive method that is useful when obtaining stability diagrams. The rate of decay of the error will be maintained regardless of the orthonormal set used [27] . The use of Block Pulse Functions provides a method with a light computational load, due to the simplicity of the functions and the sparse structure of the involved matrices. Implementation of the algorithm is straightforward, it is only necessary to compute matrices (42) corresponding to the matrices and in (1), and to substitute the remaining matrices in Section 4.1. Furthermore, the approximated monodromy operator might be used to provide insight in the nature of the PDDE, specially with a second order equation if the solution space is confined to a two dimensional vector space [28] , since a similar approximation with the use of Block Pulse Functions has been used to analytically prove properties of Periodic Ordinary Differential Equations. [29] . However a downside of the proposed method lies in the rate of convergence, which for certain cases is slower than the convergence of Fourier functions, and is certainly slower than the rate of convergence of approximations with, for example, Chebyshev polynomials.

Fund

This work was supported by CONACyT, Mexico, CVU 418725.

Conflicts of Interest

The authors declare no conflicts of interest regarding the publication of this paper.

Cite this paper

Vazquez, E.A. and Collado, J. (2018) Finite Dimensional Approximation of the Monodromy Operator of a Periodic Delay Differential Equation with Piecewise Constant Orthonormal Func- tions. Applied Mathematics, 9, 1315-1337. https://doi.org/10.4236/am.2018.911086

References

- 1. Kolmanovskii, V. and Myshkis, A. (2013) Introduction to the Theory and Applications of Functional Differential Equations. Springer Science & Business Media, Vol. 463.

- 2. Cui, H.-Y., Han, Z.-J. and Xu, G.-Q. (2016) Stabilization for Schrdinger Equation with a Time Delay in the Boundary Input. Applicable Analysis, 95, 963-977. https://doi.org/10.1080/00036811.2015.1047830

- 3. Srividhya, J. and Gopinathan, M. (2006) A Simple Time Delay Model for Eukaryotic Cell Cycle. Journal of Theoretical Biology, 241, 617-627. https://doi.org/10.1016/j.jtbi.2005.12.020

- 4. Datko, R., Lagnese, J. and Polis, M. (1986) An Example on the Effect of Time Delays in Boundary Feedback Stabilization of Wave Equations. SIAM Journal on Control and Optimization, 24, 152-156. https://doi.org/10.1137/0324007

- 5. Datko, R. and You, Y. (1991) Some Second-Order Vibrating Systems Cannot Tolerate Small Time Delays in Their Damping. Journal of Optimization Theory and Applications, 70, 521-537. https://doi.org/10.1007/BF00941300

- 6. Gilsinn, D.E., Potra, F.A., et al. (2006) Integral Operators and Delay Differential Equations. Journal of Integral Equations and Applications, 18, 297-336. https://doi.org/10.1216/jiea/1181075393

- 7. Breda, D., Maset, S. and Vermiglio, R. (2014) Pseudospectral Methods for Stability Analysis of Delayed Dynamical Systems. International Journal of Dynamics and Control, 2, 143-153. https://doi.org/10.1007/s40435-013-0041-x

- 8. Insperger, T. and Stepan, G. (2002) Semi-Discretization Method for Delayed Systems. International Journal for Numerical Methods in Engineering, 55, 503-518. https://doi.org/10.1002/nme.505

- 9. Vazquez, E.A. and Collado, J. (2016) Monodromy Operator Approximation of Periodic Delay Differential Equations by Walsh Functions. 13th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), IEEE.

- 10. Chen, W.-L. and Shih, Y.-P. (1978) Shift Walsh Matrix and Delay-Differential Equations. IEEE Transactions on Automatic Control, 23, 1023-1028. https://doi.org/10.1109/TAC.1978.1101888

- 11. Kreyszig, E. (1978) Introductory Functional Analysis with Applications, Ser. Wiley Classics Library. John Wiley & Sons.

- 12. Stokes, A. (1962) A Floquet Theory for Functional Differential Equation. Proceedings of the National Academy of Sciences of the United States of America, 48, 1330. https://doi.org/10.1073/pnas.48.8.1330

- 13. Halanay, A. (1966) Differential Equations: Stability, Oscillations, Time Lags. Academic Press, 23.

- 14. Yakubovich, V.A. and Starzhinski, V.M. (1975) Linear Differential Equations with Periodic Coefficients. Vol. 1, John Wiley, New York.

- 15. Magnus, W. and Winkler, S. (2004) Hill’s Equation, Ser. Dover Books on Mathematics Series. Dover Publications.

- 16. Walsh, J.L. (1923) A Closed Set of Normal Orthogonal Functions. American Journal of Mathematics, 45, 5-24. https://doi.org/10.2307/2387224

- 17. Golubov, B., Efimov, A. and Skvortsov, V. (2012) Walsh Series and Transforms: Theory and Applications. Springer Science & Business Media, Berlin, Vol. 64.

- 18. Ahmed, N. and Rao, K.R. (2012) Orthogonal Transforms for Digital Signal Proces-sing. Springer Science & Business Media, Berlin.

- 19. Karanam, V., Frick, P. and Mohler, R. (1978) Bilinear System Identification by Walsh Functions. IEEE Transactions on Automatic Control, 23, 709-713. https://doi.org/10.1109/TAC.1978.1101806

- 20. Gát, G. and Toledo, R. (2015) Estimating the Error of the Numerical Solution of Linear Differential Equations with Constant Coefficients via Walsh Polynomials. Acta Mathematica Academiae Paedagogicae Nyíregyháziensis, 31, 309-330.

- 21. Fine, N.J. (1949) On the Walsh Functions. Transactions of the American Mathematical Society, 65, 372-414. https://doi.org/10.1090/S0002-9947-1949-0032833-2

- 22. Kwong, C. and Chen, C. (1981) The Convergence Properties of Block-Pulse Series. International Journal of Systems Science, 12, 745-751. https://doi.org/10.1080/00207728108963780

- 23. Rao, G. and Srinivasan, T. (1978) Remarks on “Author’s Reply”; to “Comments on Design of Piecewise Constant Gains for Optimal Control via Walsh Functions”. IEEE Transactions on Automatic Control, 23, 762-763. https://doi.org/10.1109/TAC.1978.1101782

- 24. Cheng, C., Tsay, Y. and Wu, T. (1977) Walsh Operational Matrices for Fractional Calculus and Their Application to Distributed Systems. Journal of the Franklin Institute, 303, 267-284. https://doi.org/10.1016/0016-0032(77)90029-1

- 25. Gulamhusein, M. (1973) Simple Matrix-Theory Proof of the Discrete Dyadic Convolution Theorem. Electronics Letters, 9, 238-239. https://doi.org/10.1049/el:19730172

- 26. Hale, J.K. and Lunel, S.M.V. (2013) Introduction to Functional Differential Equations. Springer Science & Business Media, Berlin, Vol. 99.

- 27. Deb, A., Sarkar, G. and Sen, S.K. (1994) Block Pulse Functions, the Most Fundamental of All Piecewise Constant Basis Functions. International Journal of Systems Science, 25, 351-363. https://doi.org/10.1080/00207729408928964

- 28. Norkin, S. (1973) Introduction to the Theory and Application of Differential Equations with Deviating Arguments. Academic Press, Cambridge, Vol. 105. https://doi.org/10.1016/S0076-5392(08)62969-0

- 29. Franco, C. and Collado, J. (2017) A Novel Discriminant Approximation of Periodic Differential Equations.

上一篇:Ordered Rate Constitutive Theo 下一篇:Random Attractors of Stochasti

最新文章NEWS

- Ordered Rate Constitutive Theories for Non-Classical Thermoviscoelastic Fluids with Internal Rotatio

- Modeling and Numerical Solution of a Cancer Therapy Optimal Control Problem

- Extended Wiener Measure by Nonstandard Analysis for Financial Time Series

- Methodology for Constructing a Short-Term Event Risk Score in Heart Failure Patients

- Application of Conjugate Gradient Approach for Nonlinear Optimal Control Problem with Model-Reality

- On the Dynamics of Transition of a Classical System to Equilibrium State

- On the ECI and CEI of Boron-Nitrogen Fullerenes

- Binomial Hadamard Series and Inequalities over the Spectra of a Strongly Regular Graph

推荐期刊Tui Jian

- Chinese Journal of Integrative Medicine

- Journal of Genetics and Genomics

- Journal of Bionic Engineering

- Pedosphere

- Chinese Journal of Structural Chemistry

- Nuclear Science and Techniques

- 《传媒》

- 《中学生报》教研周刊

热点文章HOT

- On Existence of Periodic Solutions of Certain Second Order Nonlinear Ordinary Differential Equations

- Ordered Rate Constitutive Theories for Non-Classical Thermoviscoelastic Fluids with Internal Rotatio

- Application of Conjugate Gradient Approach for Nonlinear Optimal Control Problem with Model-Reality

- Fuzzy Modelling for Predicting the Risk of Recurrence and Progression of Superficial Bladder Tumors

- Integer Wavelet-Based Image Interpolation in Lifting Structure for Image Resolution Enhancement

- An Alternative Analysis on Nilsson-Ehle’s Hybridization Experiment in Wheat —Theory of Dual Multiple

- Modeling and Numerical Solution of a Cancer Therapy Optimal Control Problem

- Extended Wiener Measure by Nonstandard Analysis for Financial Time Series