Integer Wavelet-Based Image Interpolation in Lifting Structure for Image Resolution Enhancement

Vol. 9, No. 10 (2018), Article ID: 88003, 23 pages

10.4236/am.2018.910077

Chin-Yu Chen1, Shu-Mei Guo2*, Ching-Hsuan Tsai2, Jason Sheng-Hong Tsai3

1Department of Radiology, Chi-Mei Medical Center, Taiwan

2Department of Computer Science and Information Engineering, National Cheng-Kung University, Taiwan

3Department of Electrical Engineering, National Cheng-Kung University, Taiwan

Copyright © 2018 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: January 3, 2017; Accepted: October 22, 2018; Published: October 25, 2018

ABSTRACT

This paper proposes floating-point and integer wavelet-based image interpolation methods in lifting structures, combined with polynomial curve fitting, for image resolution enhancement. The proposed prediction methods estimate the high-frequency wavelet coefficients of the original image from the available low-frequency wavelet coefficients, so that the original image can be reconstructed. To further improve reconstruction performance, we use polynomial curve fitting to build relationships between the actual and the estimated high-frequency wavelet coefficients. Results of the proposed prediction algorithm for different wavelet transforms are compared with those of other methods and show that the proposed algorithm outperforms them.

Keywords:

Image Compression, Image Interpolation, Wavelet Transform, Integer Wavelet Transform, Polynomial Curve Fitting

1. Introduction

Image resolution has always been an important issue in many image- and video-processing applications. Image interpolation can be used for resolution enhancement, and many interpolation techniques have been developed to improve the quality of this task. Image and video coding techniques, such as spatial scalability and transcoding, rely on image interpolation and therefore require fast and accurate interpolation methods.

Various interpolation methods have been proposed to improve the performance of image resolution enhancement, such as bilinear [1] and bicubic [2] interpolation. Bilinear interpolation is simple to implement and has low computational complexity. However, it fails to capture value changes near edges and blurs image details. Bicubic interpolation is another popular approach for resolution enhancement. It is more accurate, but its higher computational complexity makes it time-consuming. Moreover, bicubic convolution performs poorly in edge regions of images. In [3], Guo et al. combined bilinear interpolation and the discrete cosine transform (DCT) to improve image quality, but their method still suffers from high computation time.

Another approach to image interpolation is the wavelet-based method, which can mitigate blurring and loss of contrast. These algorithms predict the high-pass filtered coefficients, and the reconstructed image is obtained from these estimated coefficients. Hence, the main problem of wavelet-based interpolation is how to estimate the high-pass coefficients accurately. In [4], detail coefficients in strong-edge areas are estimated using the regularity of the wavelet transform in coarser subbands. However, because this method estimates coefficients only in regions with strong edges, its improvement is limited. Temizel and Vlachos [5] exploited wavelet coefficient correlation in a local neighborhood and employed linear least-squares regression to estimate the unknown detail coefficients for image resolution enhancement. Piao et al. [6] proposed an image resolution enhancement approach using intersubband correlation in the wavelet domain. However, linear least-squares regression cannot guarantee optimal solutions. Methods based on probability models usually employ the hidden Markov tree (HMT) model. The HMT scheme can effectively capture interscale transition characteristics and intrascale statistics. It reflects the magnitudes of the coefficients but not their signs, and the accuracy of sign prediction is limited [7] [8].

Kim et al. [9] used multilayer perceptron (MLP) neural networks to train a mapping from the coarser scale to the finer scale for each specified image; the weakness of this method is its limited scaling factor. Lee et al. [10] proposed a Haar-transform-based lifting filter that predicts the high-frequency subbands from the LL-band, forming a wavelet-based interpolation scheme to enhance the resolution of medical images. Guo et al. [11] proposed novel 5/3 and 9/7 wavelet-based image interpolations in lifting structures for image resolution enhancement. Demirel and Anbarjafari [12] used the difference between the low-resolution image and the interpolated low-frequency subband as an intermediate stage for estimating the high-frequency subbands, producing sharper satellite images. Chavez-Roman et al. [13] designed a technique based on an additional edge-preservation procedure and mutual interpolation between the high-frequency subband images, obtained via the discrete wavelet transform (DWT), and the input low-resolution image. In [14], a wavelet-domain approach based on the dual-tree complex wavelet transform (DT-CWT) and nonlocal means (NLM) is proposed for resolution enhancement of satellite images. In [15] [16], an image resolution enhancement technique interpolates the high-frequency subband images obtained by the DWT and the difference image. The edges are enhanced by the stationary wavelet transform (SWT), and all these subbands are then combined by the inverse DWT (IDWT) to generate a new high-resolution image. Furthermore, in [16], generalized histogram equalization (GHE) is used to obtain a high-resolution, high-contrast satellite image.

Invertible wavelet transforms that map integers to integers [17] are important in lossless coding applications [18] [19]. In this paper, we propose floating-point and integer wavelet-based image interpolations in lifting structures. They use the low-band wavelet coefficients to estimate the high-frequency wavelet coefficients of the original image, so that the original image can be reconstructed from the low-band coefficients by the proposed prediction algorithms. Furthermore, the proposed method exploits linear relations between the original and the estimated high-frequency coefficients to further improve the accuracy of the predicted coefficients. The predicted high-frequency coefficients can be represented either in floating point or as integers. Finally, the inverse wavelet transform is performed to synthesize the interpolated image.

The paper is organized as follows. Section 2 introduces the standard interpolation method and gives a brief overview of the wavelet transform and reversible integer wavelet transforms. Section 3 derives the proposed methods. Experimental results for the proposed methods are given in Section 4. Finally, Section 5 provides conclusions.

2. Background

Section 2.1 first introduces the common interpolation concept and its main drawback. Section 2.2 briefly presents the concepts of the wavelet transform; a detailed description can be found in [20]. The wavelet-based interpolation framework with the lifting structure [21] is described in Section 2.3, and Section 2.4 introduces reversible integer wavelet transforms and their advantages in compression systems.

2.1. Image Interpolation

Interpolation determines the parameters of a continuous image representation from a set of discrete points. The resolution enhancement process can be regarded conceptually as a two-step operation: first, the discrete data are interpolated into a continuous curve; second, the additional samples are assigned values from that curve. Here, the simplest method, bilinear interpolation, is used to demonstrate the process. Let the original image be denoted by $f$ and the interpolated image by $\hat{f}$. In the following example, the interpolation ratio is 2. To simplify the process, one-dimensional linear interpolation is applied separately in the vertical and horizontal directions of the image to achieve two-dimensional bilinear interpolation. Along one direction, bilinear interpolation with ratio 2 can be formulated as

$$\hat{f}(2n) = f(n) \qquad (1a)$$

$$\hat{f}(2n+1) = \tfrac{1}{2}\left[f(n) + f(n+1)\right] \qquad (1b)$$

where $n$ indexes the samples of $f$ along the current row or column. After bilinear interpolation, the image is zoomed by the interpolation ratio.
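The separable procedure above can be sketched in a few lines. This is a minimal illustration, not the authors' MATLAB code: the function names are hypothetical, images are plain nested lists, and the rule of repeating the last sample at the right border is an assumption, since the text does not specify boundary handling.

```python
from typing import List

Image = List[List[float]]


def upsample_1d(samples: List[float]) -> List[float]:
    """Linear interpolation by a factor of 2 along one row or column.

    Even output positions copy the input samples; odd positions take the
    average of the two neighbouring input samples. At the right border the
    missing neighbour is replaced by the last sample (an assumed boundary rule).
    """
    n = len(samples)
    out: List[float] = []
    for i in range(n):
        right = samples[i + 1] if i + 1 < n else samples[i]
        out.append(float(samples[i]))
        out.append((samples[i] + right) / 2.0)
    return out


def bilinear_x2(img: Image) -> Image:
    """Separable bilinear zoom by 2: interpolate every row, then every column."""
    rows = [upsample_1d(row) for row in img]
    cols = [upsample_1d(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


if __name__ == "__main__":
    for row in bilinear_x2([[0, 4], [8, 12]]):
        print(row)
```

Each new pixel is an average of existing ones, which is why sharp transitions are smeared: a step edge becomes a ramp that is at least one new sample wide.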

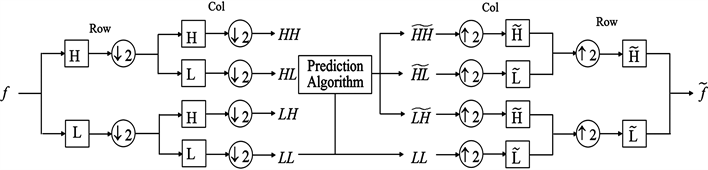

Wavelet-based interpolation predicts the high-frequency subbands (LH-band, HL-band, and HH-band) from the low-frequency subband. The left side of Figure 1 shows the forward two-dimensional wavelet transform, and the right side shows the backward (inverse) wavelet transform. Assuming that the input is a low-pass filtered image (the LL-band), the unknown high-frequency subbands can be predicted from this low-frequency subband. The simplest prediction algorithm, shown in Figure 1, pads the high-frequency subbands (LH-band, HL-band, and HH-band) with zeros. However, the wavelet-domain interpolation model calls for a better prediction algorithm that predicts the high-frequency subbands effectively.
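To make the zero-padding baseline concrete, the sketch below applies it in one dimension. As a stand-in for the filters used in the paper it uses the integer Haar (S) transform in lifting form, chosen only because it is the shortest invertible example; the function names are hypothetical. With the high band set to zero, the inverse transform simply repeats each low-band sample, which shows why zero padding cannot restore detail.

```python
from typing import List, Tuple


def s_forward(x: List[int]) -> Tuple[List[int], List[int]]:
    """Integer Haar (S transform) in lifting form; len(x) must be even."""
    even, odd = x[0::2], x[1::2]                    # lazy transform
    d = [o - e for e, o in zip(even, odd)]          # dual lifting: predict odd from even
    s = [e + (di >> 1) for e, di in zip(even, d)]   # lifting: s = floor((e + o) / 2)
    return s, d


def s_inverse(s: List[int], d: List[int]) -> List[int]:
    """Undo the lifting steps in reverse order with flipped signs."""
    even = [si - (di >> 1) for si, di in zip(s, d)]
    odd = [di + e for di, e in zip(d, even)]
    x: List[int] = []
    for e, o in zip(even, odd):
        x.extend((e, o))
    return x


def zero_pad_interpolate(s: List[int]) -> List[int]:
    """Treat s as the low band, set the high band to zero, and invert."""
    return s_inverse(s, [0] * len(s))


if __name__ == "__main__":
    print(zero_pad_interpolate([3, 7, 2]))   # each sample is simply repeated
```

With longer filters the result is smoother than pixel repetition, but it is still a pure low-pass reconstruction. The proposed method replaces the zeros with predicted LH, HL, and HH coefficients.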

Because bilinear interpolation assumes that the original data are first-derivative continuous, the result is usually blurred when it interpolates points at edges. By contrast, wavelet-based interpolation can avoid this artifact owing to its good approximation property.

2.2. Wavelet Transform

The wavelet transform is a valuable tool for image compression. It provides efficient time-frequency localization and multiresolution analysis, which also makes it suitable for image interpolation. The wavelet transform decomposes data hierarchically into subbands, and each high-frequency subband locates the edges and details of the original image. Figure 2 shows a single stage of a two-channel analysis and synthesis filter bank, where h0 and h1 are the analysis filters and g0 and g1 are the synthesis filters. In the analysis step, an input signal x is filtered with h0 and h1 and downsampled to generate the low-pass band s and the high-pass band d. In the synthesis step, s and d are upsampled and filtered with g0 and g1, and the sum of the filter outputs gives the reconstructed signal $\hat{x}$.

Figure 1. Image interpolation model in wavelet transform.

A two-dimensional wavelet transform can be implemented by applying a one-dimensional filter vertically to each column and horizontally to each row of an image, which produces four subbands (LL-, LH-, HL-, and HH-band). As shown in Figure 3, the LL-band is an approximation of the original image, the LH-band represents vertical information, the HL-band represents horizontal information, and the HH-band represents diagonal information. In our framework for image resolution enhancement, the LH-, HL-, and HH-subbands are estimated from the LL-band.

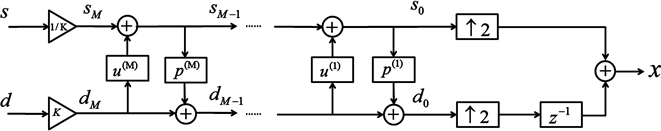

2.3. Lifting Scheme

The wavelet transform uses two filters, L and H, to perform convolution. However, convolution has a high computation cost and requires more memory. The lifting scheme was therefore developed as an improved approach to computing the discrete wavelet transform. Any discrete wavelet transform can be computed with this scheme, and almost all such transforms have lower computational complexity than with the standard filtering algorithm. The scheme first computes a trivial wavelet transform, called the lazy transform, which splits the input signal into even- and odd-indexed sequences:

$$s_l^{(0)} = x_{2l} \qquad (2a)$$

$$d_l^{(0)} = x_{2l+1} \qquad (2b)$$

Figure 2. Wavelet analysis and synthesis.

Figure 3. Two-dimensional wavelet transform in four subbands.

Next, dual lifting and lifting steps are applied to obtain

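$$d_l^{(i)} = d_l^{(i-1)} - \sum_k p_k^{(i)}\, s_{l-k}^{(i-1)} \qquad (3a)$$

$$s_l^{(i)} = s_l^{(i-1)} - \sum_k u_k^{(i)}\, d_{l-k}^{(i)} \qquad (3b)$$

for $i = 1, \ldots, M$.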

Figure 4 illustrates this process with M pairs of dual lifting and lifting steps. After the last pair, the even samples $s_l^{(M)}$ become the low-pass coefficients and the odd samples $d_l^{(M)}$ become the high-pass coefficients when scaled by a factor K:

$$s_l = s_l^{(M)} / K \qquad (4a)$$

$$d_l = K\, d_l^{(M)} \qquad (4b)$$

The inverse transform is obtained by reversing the operations and flipping the signs, as illustrated in Figure 5. The scaling is undone first:

$$s_l^{(M)} = K\, s_l \qquad (5a)$$

$$d_l^{(M)} = d_l / K \qquad (5b)$$

Then undo the M lifting steps and dual lifting steps to obtain

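$$s_l^{(i-1)} = s_l^{(i)} + \sum_k u_k^{(i)}\, d_{l-k}^{(i)} \qquad (6a)$$

$$d_l^{(i-1)} = d_l^{(i)} + \sum_k p_k^{(i)}\, s_{l-k}^{(i-1)} \qquad (6b)$$

for $i = M, M-1, \ldots, 1$.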

Finally, the even and odd samples are retrieved:

$$x_{2l} = s_l^{(0)} \qquad (7a)$$

$$x_{2l+1} = d_l^{(0)} \qquad (7b)$$
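As an illustration of the forward and inverse lifting steps, the sketch below implements one level with M = 1 and K = 1 using the (2,2) lifting coefficients (predict with 1/2, update with 1/4). These coefficients and the border handling (clamping indices, which for this filter is equivalent to symmetric extension) are assumptions chosen for brevity, and the function names are hypothetical. The inverse runs the same steps in reverse order with the signs flipped.

```python
from typing import List, Tuple


def forward_22(x: List[float]) -> Tuple[List[float], List[float]]:
    """One level of the (2,2) lifting transform with K = 1; len(x) must be even."""
    s = [float(v) for v in x[0::2]]   # lazy transform: even samples
    d = [float(v) for v in x[1::2]]   # lazy transform: odd samples
    n = len(s)
    # Dual lifting (predict): remove the linear prediction from each odd sample.
    # Clamping the index at the border equals symmetric extension for this filter.
    d = [d[l] - 0.5 * (s[l] + s[min(l + 1, n - 1)]) for l in range(n)]
    # Lifting (update): add a quarter of the two neighbouring details.
    s = [s[l] + 0.25 * (d[max(l - 1, 0)] + d[l]) for l in range(n)]
    return s, d


def inverse_22(s: List[float], d: List[float]) -> List[float]:
    """Reverse the steps and flip the signs, then interleave even/odd samples."""
    n = len(s)
    even = [s[l] - 0.25 * (d[max(l - 1, 0)] + d[l]) for l in range(n)]
    odd = [d[l] + 0.5 * (even[l] + even[min(l + 1, n - 1)]) for l in range(n)]
    x: List[float] = []
    for e, o in zip(even, odd):
        x.extend((e, o))
    return x


if __name__ == "__main__":
    s, d = forward_22([0, 1, 2, 3, 4, 5, 6, 7])
    print(d)                 # interior details of a linear ramp are zero
    print(inverse_22(s, d))  # perfect reconstruction
```

Because every step only adds a function of the other channel, the inverse needs no matrix inversion; this is the property that Section 2.4 exploits to build integer transforms.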

2.4. Integer Wavelet Transform

The wavelet transform produces floating-point coefficients in most cases. Although this allows perfect reconstruction of the original image in principle, the use of finite-precision arithmetic and quantization results in lossy compression. For lossless compression, integer transforms are needed. Traditionally, integer wavelet transforms were difficult to construct; however, the construction becomes very simple with the lifting scheme. Since every wavelet transform can be written using lifting, it has been shown that an integer version of every floating-point wavelet transform can be built with the lifting scheme. Integer wavelet transforms are created by rounding off the result of each dual lifting and lifting step before the addition or subtraction.

Figure 4. The forward wavelet transform using lifting.

Figure 5. The inverse wavelet transform using lifting.

The dual lifting and lifting steps thus become

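$$d_l^{(i)} = d_l^{(i-1)} - \left\lfloor \sum_k p_k^{(i)}\, s_{l-k}^{(i-1)} + \tfrac{1}{2} \right\rfloor \qquad (8a)$$

$$s_l^{(i)} = s_l^{(i-1)} - \left\lfloor \sum_k u_k^{(i)}\, d_{l-k}^{(i)} + \tfrac{1}{2} \right\rfloor \qquad (8b)$$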

It is invertible and the inverse can be obtained by reversing the lifting and the dual lifting steps and flipping signs:

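$$s_l^{(i-1)} = s_l^{(i)} + \left\lfloor \sum_k u_k^{(i)}\, d_{l-k}^{(i)} + \tfrac{1}{2} \right\rfloor \qquad (9a)$$

$$d_l^{(i-1)} = d_l^{(i)} + \left\lfloor \sum_k p_k^{(i)}\, s_{l-k}^{(i-1)} + \tfrac{1}{2} \right\rfloor \qquad (9b)$$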

This obviously results in an integer-to-integer transform. However, the lifting coefficients $p_k^{(i)}$ and $u_k^{(i)}$ are not necessarily integers, so computing the integer transform coefficients may still require floating-point operations. All floating-point operations can be avoided when these coefficients are rational numbers with power-of-two denominators. Here, we use the following integer wavelet transforms of the form $(N, \tilde{N})$, where $N$ is the number of vanishing moments of the analyzing high-pass filter and $\tilde{N}$ is the number of vanishing moments of the synthesizing high-pass filter. In the S+P transform, S stands for sequential and P for prediction. One of the transforms is inspired by the S+P transform: it uses one extra lifting step to build an earlier transform into one whose analyzing high-pass filter has four vanishing moments, and because the result differs from the earlier transform, it is named separately. Another transform has an analysis low-pass filter with nine coefficients and an analysis high-pass filter with seven coefficients, and both its analysis and synthesis high-pass filters have four vanishing moments. The 2/6 transform has an analysis low-pass filter with two coefficients and an analysis high-pass filter with six coefficients; it is a version of another transform in this family.

(10)

(11)

(12)

(13)

(14)

(15)

(16)

(17)

(18)
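The two ideas of Section 2.4, rounding each lifting step and avoiding floating point through power-of-two denominators, can be checked with a short sketch. It assumes the (2,2) and (4,2) lifting coefficients in the form given by Calderbank et al. [17] (predict with 1/2 or with 9/16 and 1/16, update with 1/4); whether these match the exact forms of (10)-(18) is an assumption, and the clamped borders and function names are hypothetical. Each division by $2^k$ becomes an arithmetic right shift, which in Python floors toward minus infinity, matching the floor in (8a)-(9b).

```python
import math
from fractions import Fraction
from typing import List, Tuple


def int_forward_22(x: List[int]) -> Tuple[List[int], List[int]]:
    """Integer (2,2) lifting on an even-length signal; every step stays in integers."""
    even, odd = x[0::2], x[1::2]
    n = len(even)
    # d = odd - floor((e_l + e_{l+1}) / 2 + 1/2); the divisor 2 becomes ">> 1"
    d = [odd[l] - ((even[l] + even[min(l + 1, n - 1)] + 1) >> 1) for l in range(n)]
    # s = even + floor((d_{l-1} + d_l) / 4 + 1/2); the divisor 4 becomes ">> 2"
    s = [even[l] + ((d[max(l - 1, 0)] + d[l] + 2) >> 2) for l in range(n)]
    return s, d


def int_inverse_22(s: List[int], d: List[int]) -> List[int]:
    """Undo the update, then the predict, with flipped signs and identical rounding."""
    n = len(s)
    even = [s[l] - ((d[max(l - 1, 0)] + d[l] + 2) >> 2) for l in range(n)]
    odd = [d[l] + ((even[l] + even[min(l + 1, n - 1)] + 1) >> 1) for l in range(n)]
    x: List[int] = []
    for e, o in zip(even, odd):
        x.extend((e, o))
    return x


def predict_42_shift(a: int, b: int, c: int, d: int) -> int:
    """floor(9/16 (a + b) - 1/16 (c + d) + 1/2) with integer operations only.

    a, b: nearest even neighbours of the odd sample; c, d: the outer ones.
    """
    return (9 * (a + b) - (c + d) + 8) >> 4


def predict_42_exact(a: int, b: int, c: int, d: int) -> int:
    """Same quantity computed with exact rational arithmetic, for comparison."""
    return math.floor(Fraction(9, 16) * (a + b) - Fraction(1, 16) * (c + d) + Fraction(1, 2))


if __name__ == "__main__":
    x = [12, -7, 255, 0, 3, 3, -128, 90, 41, 17]
    s, d = int_forward_22(x)
    print(s, d, int_inverse_22(s, d) == x)
```

The inverse is exact because it recomputes the very same rounded quantities from data it already has, so the rounding errors cancel instead of accumulating.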

3. Proposed Method

This section describes the proposed prediction algorithm, which uses the low-frequency coefficients to estimate the unknown high-frequency coefficients, thereby enhancing image resolution and yielding a well-reconstructed image.

3.1. Prediction

Padding the high-frequency subbands with zeros is an easy way to reconstruct the image, but it blurs the image. The proposed prediction method addresses this problem by estimating the high-frequency subbands as

(19a)

(19b)

(19c)

where I is the proposed low-pass prediction filter and J is the proposed high-pass prediction filter, applied to each row and column of an image, * denotes convolution, and LL is the input low-pass filtered image. The next subsection derives I and J for the (4,2) filter as an example to explain the algorithm.

3.2. (4,2) Filter-Based Prediction Algorithm

The lifting scheme of the (4,2) filter is shown in Figure 6; it is equivalent to (13).

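$$d_l = x_{2l+1} - \tfrac{9}{16}\left(x_{2l} + x_{2l+2}\right) + \tfrac{1}{16}\left(x_{2l-2} + x_{2l+4}\right) \qquad (20a)$$

$$s_l = x_{2l} + \tfrac{1}{4}\left(d_{l-1} + d_l\right) = \tfrac{1}{64}\left(x_{2l-4} - 8x_{2l-2} + 16x_{2l-1} + 46x_{2l} + 16x_{2l+1} - 8x_{2l+2} + x_{2l+4}\right) \qquad (20b)$$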

where $l = 0, 1, \ldots, M/2 - 1$ (M is the input data size). The input image is extended symmetrically at its boundaries.

Figure 6. Lifting scheme for the filter.

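Equations (20a) and (20b) induce the corresponding low-pass filter $L = \frac{1}{64}[1, 0, -8, 16, 46, 16, -8, 0, 1]$ and high-pass filter $H = \frac{1}{16}[1, 0, -9, 16, -9, 0, 1]$.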
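The equivalent filters follow mechanically from the lifting steps, and exact rational arithmetic makes the derivation easy to check. The sketch below assumes the standard (4,2) lifting form of Calderbank et al. [17] (predict with 9/16 and 1/16, update with 1/4); helper names are hypothetical. Expanding $s_0$ in terms of the input samples recovers the central weight 46/64 that the text attributes to (20b), which supports that assumption.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict

Coeffs = Dict[int, Fraction]   # maps input index j to the weight of x_j


def detail(k: int) -> Coeffs:
    """Weights of d_k = x_{2k+1} - 9/16 (x_{2k} + x_{2k+2}) + 1/16 (x_{2k-2} + x_{2k+4})."""
    c: Coeffs = defaultdict(Fraction)
    c[2 * k + 1] += 1
    for j in (2 * k, 2 * k + 2):
        c[j] -= Fraction(9, 16)
    for j in (2 * k - 2, 2 * k + 4):
        c[j] += Fraction(1, 16)
    return c


def approx(l: int) -> Coeffs:
    """Weights of s_l = x_{2l} + 1/4 (d_{l-1} + d_l), expanded in terms of x."""
    c: Coeffs = defaultdict(Fraction)
    c[2 * l] += 1
    for k in (l - 1, l):
        for j, w in detail(k).items():
            c[j] += w / 4
    return c


if __name__ == "__main__":
    taps = approx(0)
    print([int(taps[j] * 64) for j in range(-4, 5)])   # low-pass taps times 64
```

The nine positions from $x_{2l-4}$ to $x_{2l+4}$ are exactly the nine sequential pixels connected to one low-band coefficient in Figure 7; the weights sum to 1, so flat regions are preserved, while the high-pass weights sum to 0.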

In the prediction problem, we need to find the relationships between the input image and the low-band image. Equation (20b) shows that each low-band coefficient $s_l$ is connected to nine sequential pixels of the input image, $x_{2l-4}$ through $x_{2l+4}$, as indicated by the dotted lines in Figure 7. Because the input image is downsampled onto the even sequence, $s_l$ is dominated by $x_{2l}$. To relate the low-band image to the input image, the input image is split into the even sequence $x_{2l}$ and the odd sequence $x_{2l+1}$. The relations from the low-band image to the even and odd sequences of the input image, shown in Figure 8, are given by

(21a)

and

(21b)

respectively, where the weights in (21a) and (21b) are subject to the constraints

(22a)

and

(22b)

Figure 7. Relationship between the input image and the low-band image for the even sequence of input image.

Figure 8. Relation from the low-band image to the input image for the even sequence and the odd sequence.

Then, substituting the expressions for the even and odd samples in (21a) and (21b) into (20a) and (20b) yields

(23a)

(23b)

which implies that the low-pass prediction filter I and the high-pass prediction filter J are given, respectively, by

(24a)

(24b)

The prediction filters I and J should be symmetric, and the low-pass wavelet filter of the (4,2) transform is dominated by its central coefficient (46/64 in (20b)); thus, they are subject to the following extra constraints:

(25a)

(25b)

(25c)

(25d)

(25e)

(25f)

By empirically choosing the free parameters in (25a)-(25f) as one solution suitable for general images, one obtains

(26a)

(26b)

As a result, the unknown subbands (LH, HL, and HH) can be constructed by substituting (26a) for I and (26b) for J in (19a)-(19c).

If the actual high-frequency coefficients of the image to be reconstructed are known, we can build a relationship between the actual and the estimated high-frequency coefficients; this relationship, obtained by polynomial curve fitting, improves the prediction algorithm. In Section 4, we call this setting one layer. If the actual high-frequency coefficients are not known, we decompose the available low-frequency image into four subbands, which form layer two of the wavelet decomposition of the reconstructed image, and use the LL-band of layer two to predict the other three high-frequency subbands of layer two. We then build the relationship between the actual and the estimated high-frequency coefficients in layer two to improve the prediction algorithm by polynomial curve fitting. In Section 4, we call this setting two layers. Denote the exact coefficient at position $(i, j)$ in LH by $LH(i,j)$ and the estimated coefficient at the same position by $\widehat{LH}(i,j)$. Fitting a first-order polynomial over all coefficients of LH, we obtain the weights $a$ and $b$ by

$$(a, b) = \arg\min_{a,\, b} \sum_{i,j} \left[ LH(i,j) - a\,\widehat{LH}(i,j) - b \right]^2 \qquad (27)$$

These weights are then used to improve the accuracy of the estimated LH coefficients, and the result is rounded to obtain the integer version of the LH wavelet coefficients:

$$\widetilde{LH}(i,j) = \operatorname{round}\left(a\,\widehat{LH}(i,j) + b\right) \qquad (28)$$

where $\widetilde{LH}(i,j)$ is the improved integer coefficient at position $(i, j)$.

The same process applies to the HL and HH subbands. After the coefficients of LH, HL, and HH have been refined as above, the high-resolution image is obtained by applying the inverse integer wavelet transform. Table 1 shows the prediction filters of all integer wavelet filters used in this paper.

Table 1. Prediction filters of all integer wavelet filters used in this paper.
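The coefficient refinement step can be sketched as a first-degree least-squares fit followed by rounding. This is an illustration under stated assumptions, not the authors' MATLAB code: subbands are flattened into plain lists, the closed-form fit is the standard one for a degree-1 polynomial, rounding half up is our choice because the text only says the result is rounded off, and the function names are hypothetical. In the one-layer setting the fit uses the actual layer-one coefficients; in the two-layer setting it uses layer two.

```python
import math
from typing import List, Tuple


def fit_linear(est: List[float], actual: List[float]) -> Tuple[float, float]:
    """Least-squares weights (a, b) minimising sum (actual - a*est - b)^2."""
    n = len(est)
    mean_e = sum(est) / n
    mean_a = sum(actual) / n
    sxx = sum((e - mean_e) ** 2 for e in est)
    sxy = sum((e - mean_e) * (y - mean_a) for e, y in zip(est, actual))
    a = sxy / sxx if sxx else 0.0   # degenerate case: all estimates equal
    b = mean_a - a * mean_e
    return a, b


def refine_to_int(est: List[float], a: float, b: float) -> List[int]:
    """Apply the fitted line and round half up to get integer coefficients."""
    return [math.floor(a * e + b + 0.5) for e in est]


if __name__ == "__main__":
    a, b = fit_linear([1, 2, 3, 4], [3, 5, 7, 9])
    print(a, b, refine_to_int([1.2, -0.7], a, b))
```

A single global line per subband keeps the side information to two numbers, which is cheap to store alongside the LL-band; the same routine is applied to HL and HH.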

4. Experimental Results

In this section, we evaluate the quality of the proposed interpolation method. The test images are shown in Figure 9. Lena contains various types of image components, Baboon has many detailed components, Barbara has mainly diagonal edges, Boat has mainly horizontal edges, and Peppers has strong vertical edges. Medical 1 and Medical 2 are X-ray images of a breast and a tooth, respectively. Lena, Baboon, Barbara, Boat, and Peppers are from the USC image database [22]. Color images are converted to grayscale with the MATLAB function rgb2gray. All experiments were run on a PC with an AMD Phenom(tm) II X4 955 processor at 3.20 GHz and 4.00 GB of RAM and were implemented in MATLAB R2013a. The objective quality of the high-resolution image reconstructed by the proposed method is measured by the peak signal-to-noise ratio (PSNR). Only the LL-band of each image is stored, and it is used to recover the image at its original size.
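For reference, PSNR compares a reconstructed image with the original through the mean squared error. The sketch below assumes 8-bit images with a peak value of 255 (an assumption; the medical images may use a different bit depth) and represents images as nested lists; the function name is hypothetical.

```python
import math
from typing import Sequence


def psnr(ref: Sequence[Sequence[float]], test: Sequence[Sequence[float]],
         peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    sse = 0.0
    count = 0
    for ref_row, test_row in zip(ref, test):
        for r, t in zip(ref_row, test_row):
            sse += (r - t) ** 2
            count += 1
    if sse == 0.0:
        return math.inf   # identical images
    mse = sse / count
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    print(psnr([[10, 10]], [[11, 9]]))   # MSE = 1
```

Because the scale is logarithmic, halving the MSE raises the PSNR by about 3 dB, which helps when reading the differences in Tables 2-17.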

Tables 2-8 list the PSNR of the test images reconstructed by the proposed interpolation method with each integer wavelet transform for a zooming ratio of 1/2. The test images are reduced by the different integer wavelet transforms, and the reduced images are then enlarged with the proposed method. One layer in the tables means that the original input image is decomposed into one layer and the LL-band of layer one is used to predict the other high-frequency subbands; the linear relations between the predicted and the actual high-frequency coefficients in layer one are then used to reconstruct the original image. Two layers in the tables means that the original input image is decomposed into two layers and the LL-band of layer two is used to predict the other high-frequency subbands; the linear relations between the predicted and the actual high-frequency coefficients in layer two are then used to reconstruct the original image. Tables 2-8 show that the linear relationships built from one-layer and two-layer decompositions have similar effects.

Tables 9-15 compare, in terms of PSNR against the original images, the images reconstructed by the proposed method with different floating-point wavelet transforms and different integer wavelet transforms. They show that high-frequency coefficients estimated in floating point give better results than those estimated as integers, but the compression advantages of the integer wavelet transform make the additional distortion of the integer version tolerable.

The proposed method is compared with other interpolation methods: zero padding, [11] without evolutionary programming (EP), [11] with EP, DT-CWT-NLM [14], and SWT-DWT [15]. Because the methods in [11] apply only to the 5/3 and 9/7 analysis filters, the comparison with [11] is carried out with these two analysis filters. First, Table 16 shows the PSNR values of the different prediction algorithms for one of these wavelet filters with a zooming ratio of 1/2; the test images are reduced by a one-layer wavelet transform, and the reduced images are then enlarged with each method. Table 17 then compares the different prediction algorithms for the other wavelet filter with a zooming ratio of 1/2, using the same procedure.

Figure 9. Test natural images (a) Lena (b) Baboon (c) Barbara (d) Boat (e) Peppers (f) Medical 1 (g) Medical 2.

Table 2. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Lena.

Table 3. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Baboon.

Table 4. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Barbara.

Table 5. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Boat.

Table 6. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Peppers.

Table 7. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Medical 1.

Table 8. PSNR (in dB) of proposed method in different integer wavelet transforms in two layers and one layer for Medical 2.

Table 9. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Lena.

Table 10. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Baboon.

Table 11. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Barbara.

Table 12. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Boat.

Table 13. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Peppers.

Table 14. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Medical 1.

Table 15. PSNR (in dB) of proposed method by different wavelet transforms in floating-point and in integer for Medical 2.

The results in Table 16 show that the proposed method outperforms the other methods for Lena, Baboon, Barbara, Boat, and Medical 1. Because the parameters of the proposed method derived in Section 3 assume that the input images are continuous, the method is suited to predicting smooth images and performs less well on images with many edges and high-frequency components. Peppers and Medical 2 yield worse results because of this continuity assumption, although tuning the coefficients with the linear relation improves the reconstruction. The method in [11] with EP performs better than the proposed method for Peppers and Medical 2. The results in Table 17 show that the proposed method outperforms the other methods for Lena, Baboon, Barbara, and Medical 1, whereas Boat, Peppers, and Medical 2 yield worse results because of the continuity assumption. However, the method in [11] with EP needs more computation time to obtain its better results; in [11], it is used to verify that the method without EP is close to the optimal solution. The approach in [14] is designed for satellite images, where the DT-CWT can preserve more high-frequency components of the original images. The interpolation algorithm used in this method does not estimate the high-frequency information, so it cannot achieve better performance.

Table 16. Comparison with different methods in zooming ratio 1/2 for filter in PSNR (in dB).

Table 17. Comparison with different methods in zooming ratio 1/2 for filter in PSNR (in dB).

Table 18 lists the best wavelet filter for each condition. High-order wavelet analysis filters lead to better performance than low-order ones because they have more vanishing moments; consequently, the signal reconstructed with a high-order filter is a better approximation, which gives a better reconstruction result.
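The vanishing-moment argument can be checked directly: an analysis high-pass filter has N vanishing moments when its discrete moments of orders 0 through N-1 are zero, so it maps every polynomial of degree below N to zero. The sketch below counts these moments for the high-pass filters read off the (2,2) and (4,2) predict steps; the tap values assume the standard lifting forms of Calderbank et al. [17], and the names are hypothetical.

```python
from fractions import Fraction
from typing import Dict

# Analysis high-pass taps centred on the odd sample (offset -> weight), read off
# the predict steps d = x_odd - P(even neighbours) of the (2,2) and (4,2) transforms.
H_22: Dict[int, Fraction] = {-1: Fraction(-1, 2), 0: Fraction(1), 1: Fraction(-1, 2)}
H_42: Dict[int, Fraction] = {
    -3: Fraction(1, 16), -1: Fraction(-9, 16), 0: Fraction(1),
    1: Fraction(-9, 16), 3: Fraction(1, 16),
}


def vanishing_moments(h: Dict[int, Fraction], max_order: int = 16) -> int:
    """Count the leading moments sum_k h[k] * k**m (m = 0, 1, ...) that are zero."""
    m = 0
    while m < max_order and sum(w * k ** m for k, w in h.items()) == 0:
        m += 1
    return m


if __name__ == "__main__":
    print(vanishing_moments(H_22), vanishing_moments(H_42))
```

The counts agree with the first index N of the $(N, \tilde{N})$ naming: the (4,2) filter annihilates cubic trends that the (2,2) filter leaves in its detail band, so more of a smooth signal is captured by the low band.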

Figure 10 and Figure 11 present the subjective image quality of the proposed algorithm. They show that the proposed method produces sharper images than the zero-padding method, whereas the bilinear and bicubic methods blur the images.

Figure 10. Image Lena reconstructed by bilinear, bicubic, zero padding and the proposed algorithm.

Figure 11. Image Baboon reconstructed by bilinear, bicubic, zero padding and the proposed algorithm.

Table 18. Best wavelet filter of different conditions using proposed method for different filters and images.

5. Conclusion

In this paper, wavelet-based image interpolation for high-performance image resolution enhancement is proposed. It predicts the detail coefficients of the original image from the low-pass filtered image by examining the wavelet transform in the lifting scheme. Because the proposed method predicts the vertical and horizontal subbands as well as the diagonal subband, it is well suited to reconstructing the original image. We then use polynomial curve fitting to build linear relationships between the actual and the predicted high-frequency subbands, which improve the accuracy of the prediction algorithm. The images reconstructed by the proposed method can also be represented as integers. Experimental results compare the performance of different integer wavelet transforms with the proposed method on several well-known natural images. The proposed methods have been shown to perform better than bilinear, bicubic, and other wavelet-based methods.

Acknowledgements

This work was supported by the Ministry of Science and Technology of the Republic of China under contracts MOST 107-2221-E-006-203-MY2 and MOST 105-2221-E-006-102-MY3.

Conflicts of Interest

The authors declare no conflicts of interest regarding the publication of this paper.

Cite this paper

Chen, C.-Y., Guo, S.-M., Tsai, C.-H. and Tsai, J.S.-H. (2018) Integer Wavelet-Based Image Interpolation in Lifting Structure for Image Resolution Enhancement. Applied Mathematics, 9, 1156-1178. https://doi.org/10.4236/am.2018.910077

References

- 1. Lehmann, T.M., Gonner, C. and Spitzer, K. (1999) Survey: Interpolation Methods in Medical Image Processing. IEEE Transactions on Medical Imaging, 18, 1049-1075. https://doi.org/10.1109/42.816070

- 2. Keys, R.G. (1981) Cubic Convolution Interpolation for Digital Image Processing. IEEE Transactions on Acoustics, Speech and Signal Processing, 29, 1153-1160. https://doi.org/10.1109/TASSP.1981.1163711

- 3. Guo, S.M., Hsu, C.Y., Shih, G.C. and Chen, C.W. (2011) Fast Pixel-Size-Based Large-Scale Enlargement and Reduction of Image: Adaptive Combination of Bilinear Interpolation and Discrete Cosine Transform. Journal of Electronic Imaging, 20, Article ID: 033005. https://doi.org/10.1117/1.3603937

- 4. Carey, W.K., Chuang, D.B. and Hemami, S.S. (1999) Regularity-Preserving Image Interpolation. IEEE Transactions on Image Processing, 8, 1293-1297. https://doi.org/10.1109/83.784441

- 5. Temizel, A. and Vlachos, T. (2006) Wavelet Domain Image Resolution Enhancement. IEE Proceedings - Vision, Image and Signal Processing, 153, 25-30. https://doi.org/10.1049/ip-vis:20045056

- 6. Piao, Y., Shin, I.H. and Park, H.W. (2007) Image Resolution Enhancement Using Inter-Subband Correlation in Wavelet Domain. IEEE International Conference on Image Processing, 1, 445-448.

- 7. Kinebuchi, K., Muresan, D.D. and Parks, T.W. (2001) Image Interpolation Using Wavelet-Based Hidden Markov Trees. IEEE International Conference on Acoustics, Speech, and Signal Processing, 3, 1957-1960.

- 8. Temizel, A. (2007) Image Resolution Enhancement Using Wavelet Domain Hidden Markov Tree and Coefficient Sign Estimation. IEEE International Conference on Image Processing, 5, 381-384.

- 9. Kim, S.S., Kim, Y.S. and Eom, I.K. (2009) Image Interpolation Using MLP Neural Network with Phase Compensation of Wavelet Coefficients. Neural Computing and Applications, 18, 967-977. https://doi.org/10.1007/s00521-009-0233-7

- 10. Lee, W.L., Yang, C.C., Wu, H.T. and Chen, M.J. (2009) Wavelet-Based Interpolation Scheme for Resolution Enhancement of Medical Images. Journal of Signal Processing Systems, 55, 251-265. https://doi.org/10.1007/s11265-008-0206-6

- 11. Guo, S.M., Lai, B.W., Chou, Y.C. and Yang, C.C. (2011) Novel Wavelet-Based Image Interpolations in Lifting Structures for Image Resolution Enhancement. Journal of Electronic Imaging, 20, Article ID: 033007. https://doi.org/10.1117/1.3606587

- 12. Demirel, H. and Anbarjafari, G. (2011) Discrete Wavelet Transform-Based Satellite Image Resolution Enhancement. IEEE Transactions on Geoscience and Remote Sensing, 49, 1997-2004. https://doi.org/10.1109/TGRS.2010.2100401

- 13. Chavez-Roman, H., Ponomaryov, V. and Peralta-Fabi, R. (2012) Image Super Resolution Using Interpolation and Edge Extraction in Wavelet Transform Space. International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, 26-28 September 2012, 1-6.

- 14. Iqbal, M.Z., Ghafoor, A. and Siddiqui, A.M. (2013) Satellite Image Resolution Enhancement Using Dual-Tree Complex Wavelet Transform and Nonlocal Means. IEEE Geoscience and Remote Sensing Letters, 10, 451-455. https://doi.org/10.1109/LGRS.2012.2208616

- 15. Demirel, H. and Anbarjafari, G. (2011) Image Resolution Enhancement by Using Discrete and Stationary Wavelet Decomposition. IEEE Transactions on Image Processing, 20, 1458-1460. https://doi.org/10.1109/TIP.2010.2087767

- 16. Divya Lakshmi, M.S. (2013) Robust Satellite Image Resolution Enhancement Based on Interpolation of Stationary Wavelet Transform. International Journal of Scientific and Engineering Research, 4, 1365-1369.

- 17. Calderbank, R.C., Daubechies, I., Sweldens, W. and Yeo, B.L. (1998) Wavelet Transforms That Map Integers to Integers. Applied and Computational Harmonic Analysis, 5, 332-369. https://doi.org/10.1006/acha.1997.0238

- 18. Bilgin, A., Zweig, G. and Marcellin, M.W. (2000) Three-Dimensional Image Compression with Integer Wavelet Transforms. Applied Optics, 39, 1799-1814. https://doi.org/10.1364/AO.39.001799

- 19. Xiong, Z., Wu, X., Cheng, S. and Hua, J. (2003) Lossy-to-Lossless Compression of Medical Volumetric Data Using Three-Dimensional Integer Wavelet Transforms. IEEE Transactions on Medical Imaging, 22, 459-470. https://doi.org/10.1109/TMI.2003.809585

- 20. Mallat, S.G. (1989) A Theory for Multiresolution Signal Decomposition: The Wavelet Representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 11, 674-693. https://doi.org/10.1109/34.192463

- 21. Daubechies, I. and Sweldens, W. (1998) Factoring Wavelet Transforms into Lifting Steps. Journal of Fourier Analysis and Applications, 4, 247-269. https://doi.org/10.1007/BF02476026

- 22. Test Images. http://sipi.usc.edu/database/
